Class Separation Analyzer

Patrick Faley created a tool based on this paper.

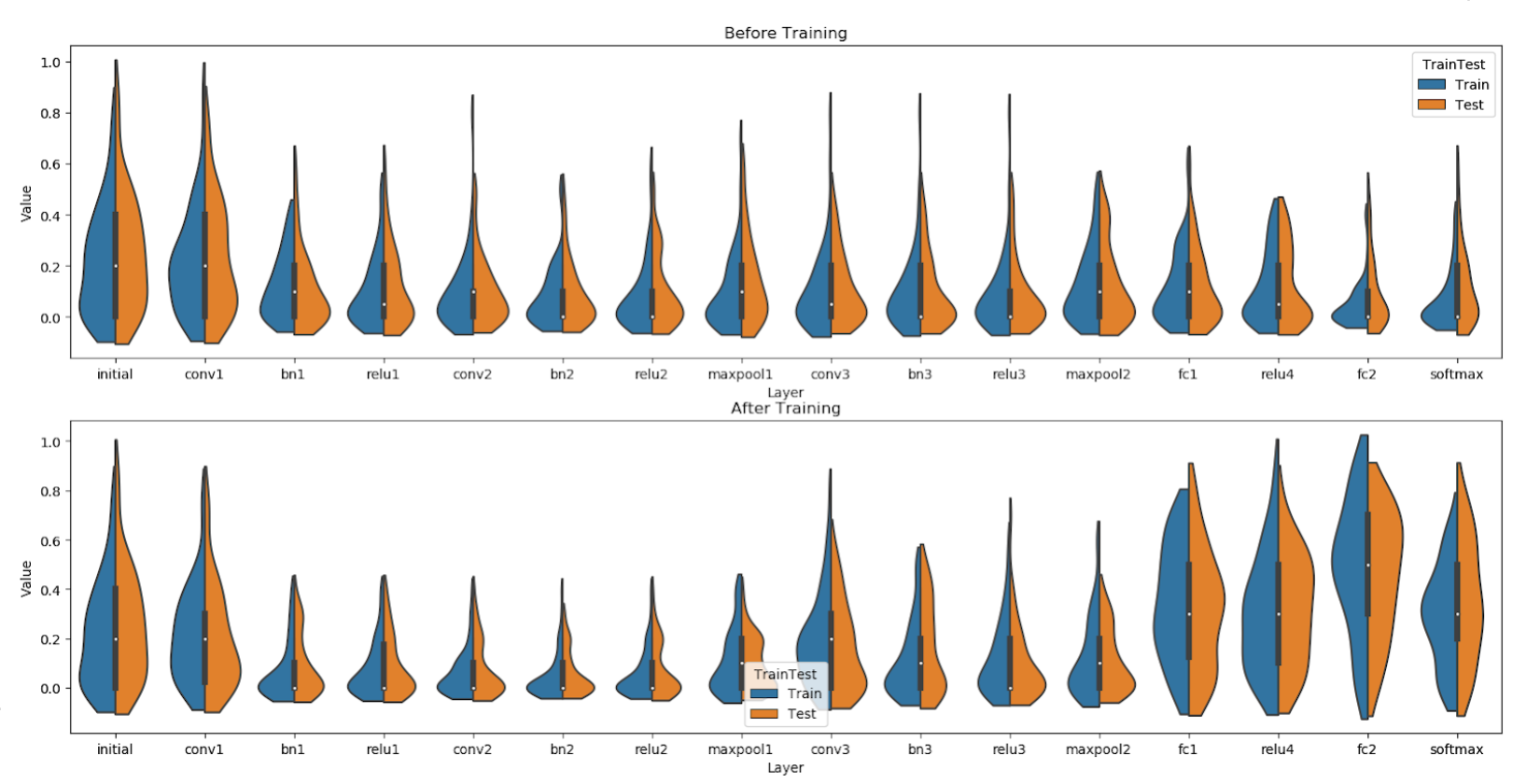

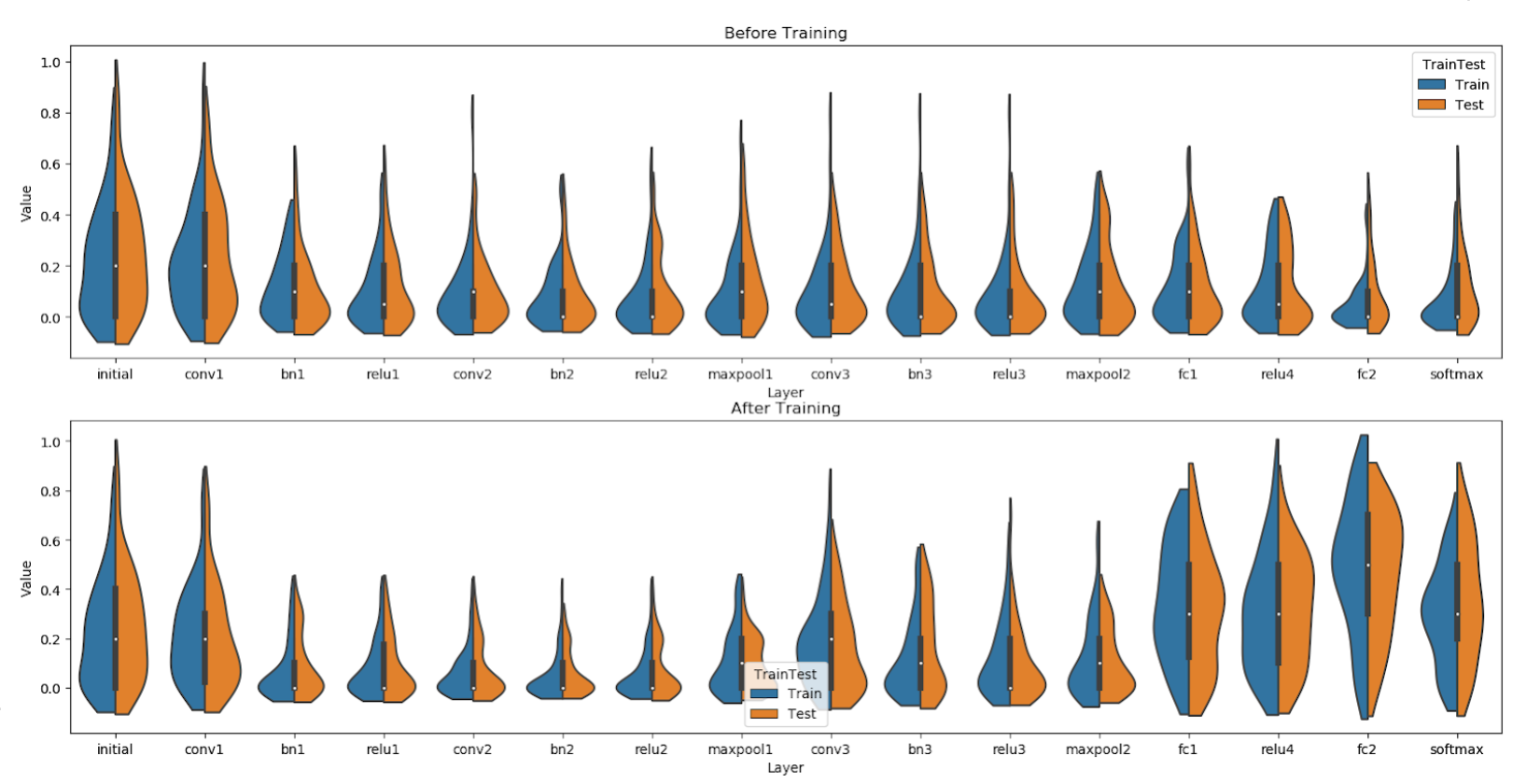

which allows users to analyze neural networks written in Pytorch to measure how well a network separates different

classes during training. With this information, users can ensure that models are learning to distinguish between classes

and determine which specific layers contribute the most to class separation. This can be used to make informed decisions

about model utility and layer composition. The repository can be found

here.
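The text does not say which separation metric the tool computes. The sketch below is only an illustration of one common choice: a Fisher-style ratio of between-class scatter to within-class scatter, computed on one layer's activations. A larger ratio means the class centroids sit far apart relative to the spread inside each class. The names `separation_ratio`, `layer_a` and `layer_b` are hypothetical, and the feature vectors are toy data. With a real PyTorch model, per-layer activations could be captured with `torch.nn.Module.register_forward_hook` and flattened into vectors before scoring. Plain Python is used here so the example runs without extra dependencies.

```python
from collections import defaultdict
from typing import Sequence


def _mean(vectors: Sequence[Sequence[float]]) -> list[float]:
    """Component-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def separation_ratio(features: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Hypothetical metric: between-class scatter / within-class scatter.

    `features` holds one layer's activation vector per sample and `labels`
    the matching class labels. Larger values mean better-separated classes.
    """
    by_class = defaultdict(list)
    for vec, label in zip(features, labels):
        by_class[label].append(vec)

    global_mean = _mean(features)
    between = 0.0
    within = 0.0
    for vecs in by_class.values():
        class_mean = _mean(vecs)
        # Distance of this class centroid from the global centroid, weighted by class size.
        between += len(vecs) * _sq_dist(class_mean, global_mean)
        # Spread of the class's samples around their own centroid.
        within += sum(_sq_dist(v, class_mean) for v in vecs)
    return between / within if within > 0 else float("inf")


# Toy activations from two hypothetical layers for the same four samples.
labels = [0, 0, 1, 1]
layer_a = [[0.0, 0.0], [0.2, 0.0], [1.0, 1.0], [1.2, 1.0]]  # classes form tight, distant clusters
layer_b = [[0.0, 0.0], [1.0, 1.0], [0.2, 0.0], [1.2, 1.0]]  # classes overlap

for name, feats in [("layer_a", layer_a), ("layer_b", layer_b)]:
    print(name, separation_ratio(feats, labels))
```

Comparing the scores across layers gives a rough picture of where separation emerges. In this toy case `layer_a` scores far higher than `layer_b`. Tracking the same number across training epochs would show whether the model is actually learning to pull the classes apart.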


Join Us

We are looking for motivated graduate students and postdoctoral scholars interested in the general areas of Computer Architecture, Circuit Design, and Machine Learning.

We are especially interested in Ph.D. applicants who want to explore algorithms, architectures, or circuit-level techniques for building energy-efficient intelligent systems.

Helpful Experience

- Hardware Design for Machine Learning and Deep Neural Networks

- Reinforcement Learning

- Generative Models

- Strong coding abilities (C++ and Python)

- Deep Learning platforms (PyTorch or TensorFlow)

- VLSI Circuit Design and Computer Architecture

- CMOS Chip Tape-Out and Testing

Open Positions

At the Intelligent Microsystems Lab, our research spans multiple levels of abstraction, including systems, circuits, and algorithm design, at the interface of machine intelligence and cyber-physical systems. We study neuromorphic and other non-von Neumann architectures that exploit the energy efficiency of analog CMOS and alternative (beyond-CMOS) computing structures to deliver orders-of-magnitude improvements in the performance of adaptive and learning systems. We work closely with groups developing new devices and materials to build new chips that approach the limits of energy efficiency.

How to apply

If you are a postdoc candidate, please email us your CV, a research statement, and the names of two people we can contact for reference letters.
